Alignment using ORPO

I explored ORPO yesterday with the intrepid Sam at the Recurse Center. We worked through the ORPO chapter of Hugging Face’s smol course, but honestly the notebook has little code or helpful explanation. I learned the most from reading the first part of the official ORPO paper on arXiv.

What the heck is ORPO?

ORPO stands for “Odds Ratio Preference Optimization”, and it’s a method for aligning a model (as an alternative to DPO or RLHF). Model alignment is concerned with making a trained model adhere more closely to human preferences. When we fine-tune a model, we can make it more useful for a target domain, but we might still get undesirable responses. “Aligning” the model sharpens its behavior so we’re more likely to get the kinds of responses we prefer.

Sam and I previously explored alignment via DPO (“direct preference optimization”), which takes a fine-tuned model and then improves it by training it on a preference dataset.

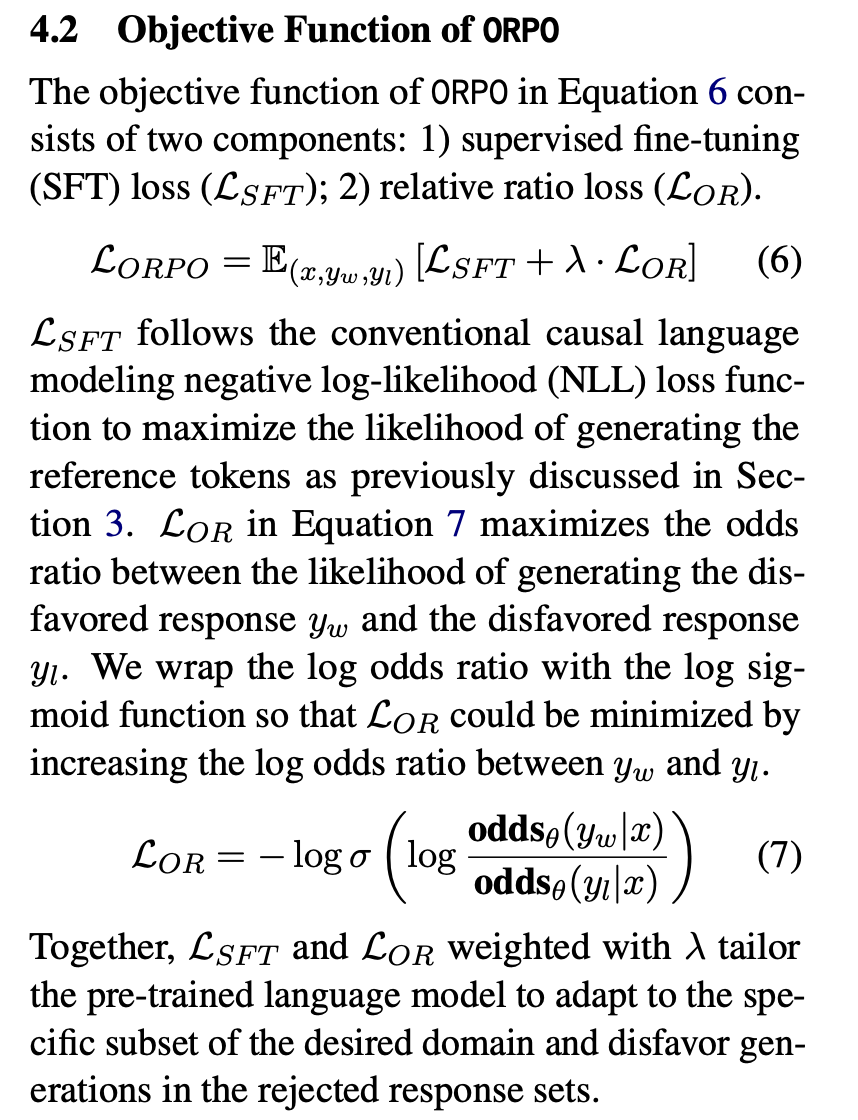

While DPO involved two separate steps (SFT, then alignment), ORPO lets us combine both steps into a single process. This combined approach is its key innovation: one training stage instead of two makes it more efficient while still being powerful. It does this with a really fancy loss function that has two parts: an “SFT loss” and an “odds ratio loss”.

What is a loss function?

A loss function measures how well a model is performing at a given task. It tells us how “wrong” a model’s prediction was, and training tries to make that number smaller.

The SFT loss we use in ORPO is the “negative log likelihood”, which is the conventional loss function for language models. (We take the negative log because that turns a probability-maximization goal into a loss-minimization one.)
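To make that concrete for myself, here is a minimal sketch in plain Python. The function name and the made-up probabilities are mine, not from the paper or the course; each number stands in for the probability the model gave to the correct next token.

```python
import math

def negative_log_likelihood(token_probs):
    """Average negative log probability assigned to the correct tokens."""
    return -sum(math.log(p) for p in token_probs) / len(token_probs)

# Hypothetical probabilities for the correct token at each position.
confident = negative_log_likelihood([0.9, 0.8, 0.95])  # model mostly right
unsure = negative_log_likelihood([0.2, 0.1, 0.3])      # model mostly wrong

print(f"confident model loss: {confident:.3f}")
print(f"unsure model loss:    {unsure:.3f}")
```

The confident model gets the lower loss, so pushing the loss down is the same as pushing the probability of the right tokens up.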

Why can’t we just do supervised fine-tuning? Why do we need an alignment step?

From the ORPO paper:

SFT plays a significant role in tailoring the pre-trained language models to the desired domain (Zhou et al., 2023a; Dong et al., 2024) by increasing the log probabilities of pertinent tokens. Nevertheless, this inadvertently increases the likelihood of generating tokens in undesirable styles … Therefore, it is necessary to develop methods capable of preserving the domain adaptation role of SFT while concurrently discerning and mitigating unwanted generation styles.

and:

While cross-entropy is generally effective for domain adaptation (Mao et al., 2023), there are no mechanisms to penalize rejected responses when compensating for the chosen responses. Therefore, the log probabilities of the tokens in the rejected responses increase along with the chosen responses, which is not desired from the viewpoint of preference alignment.

My translation: SFT makes a model better at tasks in a specific domain, but our regular SFT loss approach means we get better at mimicking all patterns in a domain, even undesired patterns.

So how does ORPO work?

From the ORPO paper:

We introduce a novel preference alignment algorithm, Odds Ratio Preference Optimization (ORPO), which incorporates an odds ratio-based penalty to the conventional negative log-likelihood (NLL) loss for differentiating the generation styles between favored and disfavored responses.

When we calculate the loss in ORPO, we combine two things: the SFT loss (“how well does the model predict the preferred output?”) and the odds ratio loss, which I think of as “how much more likely is the model to generate the response we want than the response we don’t?”
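Here is a tiny sketch of the odds ratio on its own, assuming we already have one probability per response. In the paper that probability is a length-normalized likelihood of the whole response; here I just plug in made-up numbers, and `odds` and `odds_ratio` are names I chose for illustration.

```python
def odds(p):
    """Odds of an event with probability p: how likely it is vs. not."""
    return p / (1.0 - p)

def odds_ratio(p_chosen, p_rejected):
    """How many times higher the odds of the chosen response are."""
    return odds(p_chosen) / odds(p_rejected)

# Hypothetical probabilities for a preferred and a rejected response.
favors_chosen = odds_ratio(p_chosen=0.8, p_rejected=0.2)
favors_rejected = odds_ratio(p_chosen=0.2, p_rejected=0.8)

print(favors_chosen)    # well above 1: the model leans toward the response we want
print(favors_rejected)  # well below 1: the model leans toward the response we don't
```

An odds ratio of exactly 1 would mean the model has no preference between the two responses.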

The odds ratio tells us about the model’s preferences, but we need to turn this into a loss signal that can guide training. We want:

- High loss when the model prefers responses we don’t like

- Low loss when the model prefers responses we do like

Ideally, we also want numbers that are easy to deal with mathematically, so the paper applies a log and a sigmoid to the odds ratio (y_w is the chosen response and y_l is the rejected one):

We wrap the log odds ratio with the log sigmoid function so that L_OR could be minimized by increasing the log odds ratio between y_w and y_l…
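A small sketch of that wrapping step helped me see why it gives the high/low behavior we want. It assumes we already have the log odds ratio as a single number; the function names are mine, and the loss is written as the negative log sigmoid so that a bigger log odds ratio means a smaller loss.

```python
import math

def log_sigmoid(x):
    """Numerically stable log(sigmoid(x)) = log(1 / (1 + exp(-x)))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))

def odds_ratio_loss(log_odds_ratio):
    """Negative log sigmoid of the log odds ratio (chosen vs. rejected)."""
    return -log_sigmoid(log_odds_ratio)

# Hypothetical log odds ratios: negative means the model prefers the rejected response.
for value in (-3.0, 0.0, 3.0):
    print(f"log odds ratio {value:+.1f} -> loss {odds_ratio_loss(value):.3f}")
```

The loss is large when the log odds ratio is negative, moderate at zero (no preference), and close to zero once the model clearly favors the chosen response.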

This combination of SFT loss and odds ratio loss means we get an objective function that allows for domain-tailoring AND pushes the model to favor patterns we like over patterns we don’t.
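Putting the two parts together, a rough sketch of the combined objective for a single example might look like this. It follows my reading of the paper: the odds ratio term is scaled by a weight (the paper calls it λ, here `lam`) and added to the SFT loss. The helper names, the toy probabilities, and the default `lam=0.1` are my own choices for illustration, and a real trainer works on batches of model logits rather than Python lists.

```python
import math

def avg_log_prob(token_probs):
    """Length-normalized log probability of a response (each p must be in (0, 1))."""
    return sum(math.log(p) for p in token_probs) / len(token_probs)

def log_odds(log_p):
    """log(p / (1 - p)), computed from log p."""
    return log_p - math.log1p(-math.exp(log_p))

def orpo_loss(chosen_token_probs, rejected_token_probs, lam=0.1):
    """Sketch of SFT loss + lam * odds ratio loss for one preference pair."""
    log_p_chosen = avg_log_prob(chosen_token_probs)
    log_p_rejected = avg_log_prob(rejected_token_probs)

    # SFT part: negative log likelihood of the chosen response only.
    sft_loss = -log_p_chosen

    # Odds ratio part: -log(sigmoid(log odds ratio)), written as log(1 + exp(-x)).
    # (Not guarded against overflow for extremely negative ratios.)
    log_odds_ratio = log_odds(log_p_chosen) - log_odds(log_p_rejected)
    or_loss = math.log1p(math.exp(-log_odds_ratio))

    return sft_loss + lam * or_loss

# Hypothetical per-token probabilities for the chosen and rejected responses.
aligned = orpo_loss([0.9, 0.8], [0.2, 0.1])
misaligned = orpo_loss([0.2, 0.1], [0.9, 0.8])
print(f"aligned: {aligned:.3f}, misaligned: {misaligned:.3f}")
```

Setting `lam=0` leaves plain SFT on the chosen response, which is a handy way to see that the odds ratio term is an extra penalty layered on top.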

Published on: 2025-01-09